Imagine a theatre where every spotlight, sound cue, and prop appears exactly when needed—no more, no less. The stagehands aren’t waiting backstage all night; they spring into action only when their cue arrives, perform flawlessly, and disappear until the next scene. This is the philosophy of serverless architecture: compute resources appearing only when summoned, executing precisely what’s required, and vanishing when the curtain falls.

In this world, developers no longer manage servers or scale infrastructure. Instead, they design event-driven functions that react to triggers—API requests, database updates, or messages from queues. But like any finely choreographed production, smooth performance requires more than just spontaneity. Behind the scenes, operational patterns ensure that functions start fast, costs remain predictable, and orchestration flows without disruption.

The Pulse of Serverless: Event-Driven Deployments

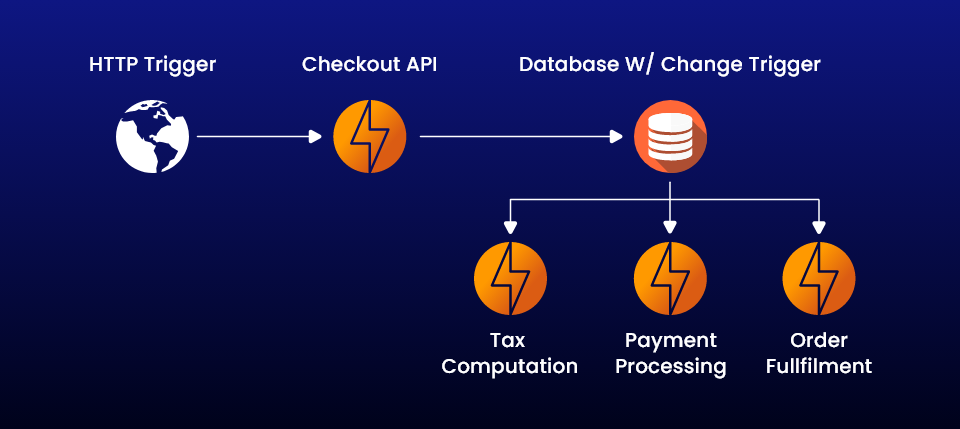

At the heart of serverless lies event-driven execution, where every function is a reaction to an external signal. Picture a relay race where each runner only begins moving when the baton arrives. In a similar fashion, serverless systems activate functions when specific triggers occur—such as uploading a file to cloud storage or receiving a user request via an API gateway.

The beauty of this approach lies in elasticity. Functions scale automatically, handling thousands of concurrent events without manual intervention, up to the concurrency limits the platform enforces. However, this dynamism becomes harder to manage when multiple functions interact. Developers therefore adopt orchestration patterns such as chaining, fan-out/fan-in, and state machines to keep control over distributed workflows.
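
The fan-out/fan-in pattern can be sketched locally in Python. This is a minimal illustration under stated assumptions, not a production orchestrator. The hypothetical functions `process_chunk` and `aggregate` stand in for separately deployed functions, and a thread pool stands in for the platform's parallel invocations. In practice this coordination usually lives in a managed state-machine or workflow service rather than in a single process.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical worker: stands in for a separately deployed function
# that handles one independent piece of work (e.g. one uploaded file).
def process_chunk(chunk: list[int]) -> int:
    return sum(x * x for x in chunk)


# Hypothetical aggregator: the "fan-in" step that combines partial results.
def aggregate(partials: list[int]) -> int:
    return sum(partials)


def split(items: list[int], size: int) -> list[list[int]]:
    """Chaining step 1: break the input into independent chunks."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def fan_out_fan_in(items: list[int], chunk_size: int = 3) -> int:
    chunks = split(items, chunk_size)
    # Fan-out: one worker per chunk, run concurrently, much as a platform
    # would start one function instance per event.
    with ThreadPoolExecutor(max_workers=len(chunks) or 1) as pool:
        partials = list(pool.map(process_chunk, chunks))
    # Fan-in: wait for every branch to finish, then combine.
    return aggregate(partials)


if __name__ == "__main__":
    print(fan_out_fan_in(list(range(10))))
```

Because each branch is independent, a failure or slow branch only affects its own chunk. The fan-in step is where the workflow must decide how to handle missing partial results.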

Taming the Cold Start Beast

One of the most persistent challenges in serverless environments is the cold start problem: the extra latency incurred when the platform must create and initialise a new function instance, whether for the first request after a period of idleness or because a traffic burst requires additional instances. A few hundred milliseconds might seem trivial. In latency-sensitive applications such as financial transactions or real-time analytics, however, even that delay can break user expectations, and heavier runtimes or large deployment packages can push it higher.

To mitigate cold starts, teams employ several operational strategies:

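- Provisioned Concurrency: Keeping a fixed number of function instances initialised in advance (AWS Lambda's name for this feature) lets them respond without a cold start. Those instances are billed whether or not they receive traffic.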

- Lightweight Initialisation: Avoid loading unnecessary dependencies or large libraries during startup. The leaner the function, the faster it awakens.

- Lazy Loading: Defer the initialisation of non-critical components until a request actually needs them, so requests that never use them do not pay the start-up cost.

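- Scheduled Invocations: Periodically invoking dormant functions keeps them “warm” and reduces startup lag. Each ping, however, warms only one instance and does little for sudden bursts of concurrent traffic.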
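
Lightweight initialisation and lazy loading can be combined in the handler itself. The sketch below is hedged in several ways. `handler(event, context)` mirrors the common Python handler convention, but `_load_report_engine`, `get_report_engine`, and the `stats` counter are hypothetical names invented for illustration. The module-level cache relies on the platform reusing a warm instance between invocations.

```python
import time

# Module-level cache: survives between invocations while the instance stays
# warm, and is rebuilt from scratch after a cold start.
_report_engine = None
stats = {"inits": 0}  # instrumentation for this sketch only


def _load_report_engine() -> dict:
    """Hypothetical expensive initialisation (large library, model, client)."""
    stats["inits"] += 1
    time.sleep(0.01)  # placeholder for real start-up cost
    return {"ready": True}


def get_report_engine() -> dict:
    # Lazy loading: pay the start-up cost only when a request needs it,
    # then reuse the same object for later requests on this instance.
    global _report_engine
    if _report_engine is None:
        _report_engine = _load_report_engine()
    return _report_engine


def handler(event: dict, context: object = None) -> dict:
    # Cheap path: health checks never touch the heavy dependency.
    if event.get("action") == "ping":
        return {"status": 200, "body": "pong"}
    engine = get_report_engine()
    return {"status": 200, "body": f"report ready={engine['ready']}"}
```

A `ping` request leaves `stats["inits"]` at zero. Two consecutive report requests on the same warm instance trigger the expensive load only once.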

The key lies in balancing readiness with efficiency—too many pre-warmed instances negate the cost advantage of serverless, while too few risk sluggish performance.
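
One way to ground that balance in numbers is a Little's-law estimate. The number of requests in flight is roughly the request rate multiplied by the average duration. The sketch below applies that estimate. The `headroom` burst factor and its default of 1.2 are assumptions for illustration, not a platform setting.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float,
                         headroom: float = 1.2) -> int:
    """Estimate how many instances to keep warm.

    In-flight requests ~= arrival rate x average duration (Little's law),
    multiplied by an assumed safety factor for bursts.
    """
    if requests_per_second < 0 or avg_duration_s < 0 or headroom < 1:
        raise ValueError("rate and duration must be >= 0, headroom >= 1")
    raw = requests_per_second * avg_duration_s * headroom
    # Round away floating-point noise before taking the ceiling, so that
    # e.g. 12.000000000000002 does not become 13 instances.
    return math.ceil(round(raw, 9))
```

At 50 requests per second and 200 ms per request, about 10 requests are in flight at any moment. With the assumed 20% headroom, that suggests 12 warm instances. Anything above that mostly adds idle cost.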

Cost Optimisation: Paying Only for Precision

Serverless computing promises a seductive proposition: you pay only for what you use. But without discipline, that promise can turn deceptive. Like leaving a faucet dripping all night, frequent invocations or poorly optimised functions can rack up costs quickly.
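
Pay-per-use billing is typically a per-request charge plus compute time measured in GB-seconds (allocated memory × execution time). AWS Lambda, for example, bills this way. The sketch below models that structure. The unit prices in `PricingAssumptions` are placeholders, not any provider's current rates. Free tiers, billing-granularity rounding, and data transfer are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingAssumptions:
    # Hypothetical unit prices: substitute your provider's published rates.
    price_per_gb_second: float = 0.00001
    price_per_million_requests: float = 0.20


def monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                 pricing: PricingAssumptions = PricingAssumptions()) -> float:
    """Estimate: request charge + compute charge (GB-seconds x unit price)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * pricing.price_per_gb_second
    requests = invocations / 1_000_000 * pricing.price_per_million_requests
    return compute + requests
```

Under these assumed prices, 3 million invocations of 500 ms at 512 MB come to about 8.10 per month. If a slow downstream call doubles the average duration to 1 s, the estimate rises to about 15.60 with no change in traffic. This is the dripping-faucet effect in miniature.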

To master cost efficiency, organisations employ several operational patterns:

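- Granular Function Design: Break large monolithic functions into smaller, single-purpose functions. Each function can then be given only the memory and timeout its task needs, instead of paying for the heaviest code path on every invocation.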

- Event Filtering: Trigger functions only for relevant events instead of processing every message from a stream or queue.

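- Right-Sized Timeouts: Set each function's execution time limit just above its expected worst-case duration. Infinite loops or hung external calls are then cut off early instead of running, and being billed, until the platform maximum.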

- Observability and Cost Dashboards: Monitor function invocations, memory usage, and billing metrics continuously to detect anomalies early.
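
Event filtering is most effective when the platform evaluates a pattern before invoking anything, so unmatched events never start (or bill) a function. The sketch below models that idea with a simplified matcher. Leaves are lists of allowed values and nested dictionaries match nested fields. The matcher, `ORDER_FILTER`, and `route` are hypothetical and represent only a small, illustrative subset of real event-pattern grammars.

```python
from typing import Any


def matches(pattern: dict[str, Any], event: dict[str, Any]) -> bool:
    """Simplified content filter, loosely modelled on JSON event patterns.

    Each pattern leaf is a list of allowed values; nested dicts match nested
    fields. Not any provider's full filtering grammar.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical filter: only paid orders from two regions should trigger work.
ORDER_FILTER = {"detail": {"type": ["order.paid"], "region": ["eu", "us"]}}


def route(events: list[dict[str, Any]],
          pattern: dict[str, Any] = ORDER_FILTER) -> list[dict[str, Any]]:
    """Return only the events that would trigger (and be billed for) a run."""
    return [e for e in events if matches(pattern, e)]
```

Of a mixed batch containing a paid EU order, a viewed EU order, a paid APAC order, and an unrelated event, only the first would reach the function.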

A mature cost optimisation culture transforms serverless from a budgetary gamble into a predictable, scalable investment.

Observability and Resilience in Motion

In traditional systems, monitoring servers provides clear insights into health and performance. In serverless, where infrastructure is abstracted away, visibility must shift from servers to events. This means tracking metrics such as request latency, error rates, invocation counts, and downstream dependencies.
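
Event-level metrics can be derived directly from per-invocation records. This is a minimal sketch, assuming a hypothetical `Invocation` record, for example one parsed from platform logs. It computes invocation counts, error rate, and nearest-rank p95 latency per function. Managed monitoring services compute similar figures for you.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Invocation:
    # Hypothetical record shape, e.g. parsed from platform logs.
    function: str
    duration_ms: float
    error: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need values and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarise(invocations: list[Invocation]) -> dict[str, dict[str, float]]:
    """Group records by function and compute per-function health metrics."""
    by_fn: dict[str, list[Invocation]] = {}
    for inv in invocations:
        by_fn.setdefault(inv.function, []).append(inv)
    report = {}
    for fn, calls in by_fn.items():
        report[fn] = {
            "invocations": len(calls),
            "error_rate": sum(c.error for c in calls) / len(calls),
            "p95_ms": percentile([c.duration_ms for c in calls], 95),
        }
    return report
```

Tracking p95 rather than the average matters here. Cold starts affect a minority of requests, so they tend to show up in the tail long before they move the mean.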

The modern approach involves distributed tracing and centralised logging. Each function propagates and logs a shared trace identifier, which lets engineers reconstruct a transaction's journey across multiple functions. Tools like AWS X-Ray, Google Cloud Trace, and Azure Application Insights have become indispensable for maintaining observability in these ephemeral environments.
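
The core idea behind trace identifiers can be shown without any tracing library. This is a minimal sketch, assuming two hypothetical functions (`api_handler`, `billing_handler`) and an in-memory `LOG_SINK` standing in for a centralised log store. Managed tracers propagate their own context headers (W3C Trace Context, for instance, defines the vendor-neutral `traceparent` header), so the `trace_id` field here is illustrative only.

```python
import json
import time
import uuid

LOG_SINK: list[str] = []  # stands in for a centralised log store


def log(trace_id: str, function: str, message: str, **fields) -> None:
    """Emit one structured (JSON) log line carrying the trace identifier."""
    line = json.dumps({"ts": time.time(), "trace_id": trace_id,
                       "function": function, "message": message, **fields})
    LOG_SINK.append(line)
    print(line)


def api_handler(event: dict) -> dict:
    # Reuse an incoming trace ID if the caller sent one; otherwise start a trace.
    trace_id = event.get("trace_id") or uuid.uuid4().hex
    log(trace_id, "api_handler", "order received", order_id=event["order_id"])
    # Propagate the ID inside the message handed to the next function.
    return billing_handler({"trace_id": trace_id, "order_id": event["order_id"]})


def billing_handler(event: dict) -> dict:
    trace_id = event["trace_id"]
    log(trace_id, "billing_handler", "charge started", order_id=event["order_id"])
    return {"trace_id": trace_id, "status": "charged"}


def reconstruct(trace_id: str) -> list[str]:
    """Rebuild one transaction's path by filtering the centralised logs."""
    entries = [json.loads(line) for line in LOG_SINK]
    return [e["function"] for e in entries if e["trace_id"] == trace_id]
```

Calling `api_handler({"order_id": "A1"})` and then passing the returned `trace_id` to `reconstruct` yields the ordered path `["api_handler", "billing_handler"]`, even though the two functions would run as separate instances in a real deployment.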

Resilience, too, requires architectural foresight. Patterns such as retry mechanisms, dead-letter queues, and circuit breakers ensure that transient failures don’t cascade into system-wide outages. By designing with failure in mind, teams create systems that recover gracefully without human intervention.
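
Two of these patterns, retries and dead-letter queues, fit in a short sketch. It assumes a hypothetical `TransientError` type that marks failures worth retrying, and it uses a plain list in place of a real dead-letter queue. Retries use exponential backoff with full jitter so that many failing instances do not retry in lockstep. A circuit breaker would sit in front of `work` and is not shown here.

```python
import random
import time
from typing import Callable, Optional


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def process_with_retries(message: dict,
                         work: Callable[[dict], object],
                         dead_letters: list[dict],
                         max_attempts: int = 3,
                         base_delay_s: float = 0.1,
                         sleep: Callable[[float], None] = time.sleep
                         ) -> Optional[object]:
    """Retry transient failures with exponential backoff and full jitter;
    park the message in the dead-letter list once attempts are exhausted."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return work(message)
        except TransientError as exc:
            # Non-transient exceptions are not caught: they propagate at once,
            # since retrying them would only waste paid invocations.
            if attempt == max_attempts:
                dead_letters.append({"message": message, "error": str(exc),
                                     "attempts": attempt})
                return None
            # Full jitter: random delay in [0, base * 2^(attempt - 1)].
            sleep(random.uniform(0, base_delay_s * 2 ** (attempt - 1)))
```

Injecting `sleep` keeps the sketch testable without real waiting. Messages that land in the dead-letter list keep their payload and last error, so they can be inspected and replayed once the underlying fault is fixed.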

The Human Factor: Automation with Accountability

While serverless abstracts infrastructure management, it amplifies the need for operational discipline. Developers must think not only about writing code but about how that code behaves under scale, failure, and fluctuating demand.

This human dimension transforms DevOps from a maintenance role into one of stewardship—guiding autonomous systems toward stability and efficiency. The cultural shift involves collaboration between teams, where automation is balanced with accountability, and rapid experimentation coexists with careful governance.

Conclusion

Serverless architecture represents the next chapter in cloud evolution—an ecosystem where compute emerges on demand, scales elastically, and disappears when idle. Yet, behind this apparent simplicity lies a world of operational sophistication. Managing cold starts, orchestrating event-driven functions, optimising costs, and maintaining observability are not mere technical details—they are the craft that makes the serverless stage perform flawlessly.

When executed thoughtfully, serverless systems deliver agility, scalability, and cost control that are difficult to achieve with traditional architectures. The future of cloud-native applications will belong to those who master not just the art of writing functions, but the discipline of orchestrating them, one event at a time.